EFDC+ is DSI's version of the Environmental Fluid Dynamics Code (EFDC). It supersedes the version that was initially developed by the Virginia Institute of Marine Science and later supported by the US Environmental Protection Agency (USEPA). We provided a brief review of the different versions of EFDC on our blog.

As we develop EFDC+, continuously adding features and fixing bugs, it is essential to test the code against a batch of test cases to ensure code integrity and increase confidence in modeling results. This is especially important because a seemingly small change can have an unforeseen impact on model results. The test cases allow us to evaluate different aspects of EFDC+ across a variety of applications.

However, manually running an adequate set of test models after each change to EFDC+ and evaluating the differences is extremely resource-intensive. To address this, we have developed a streamlined process that runs multiple test models, evaluates the differences, and produces a detailed report for us to review. We can adjust a single variable and, within a couple of hours, have dozens of test results demonstrating the effect of that change.
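The specific statistics and pass/fail criteria are the subject of a future post, so the following is only a hypothetical sketch of what "evaluating the differences" between a test run and its reference could look like. It assumes each model output has already been reduced to a matching series of values (for example, a water surface elevation time series at one cell). The names `compare_series` and `ComparisonResult`, the chosen statistics, and the tolerance are illustrative assumptions, not part of EFDC+ or its tooling.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ComparisonResult:
    """Summary statistics for one output series versus its reference (hypothetical)."""
    rmse: float          # root-mean-square difference
    mean_bias: float     # mean of (candidate - reference)
    max_abs_diff: float  # largest absolute point-wise difference
    passed: bool         # True if max_abs_diff is within the tolerance


def compare_series(
    candidate: Sequence[float],
    reference: Sequence[float],
    tolerance: float = 1e-6,  # hypothetical threshold, not an EFDC+ criterion
) -> ComparisonResult:
    """Compare a candidate-run series with the reference run, point by point."""
    if len(candidate) != len(reference):
        raise ValueError("candidate and reference series must have the same length")
    if len(candidate) == 0:
        raise ValueError("series must not be empty")

    diffs = [c - r for c, r in zip(candidate, reference)]
    n = len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    mean_bias = sum(diffs) / n
    max_abs_diff = max(abs(d) for d in diffs)
    return ComparisonResult(rmse, mean_bias, max_abs_diff, max_abs_diff <= tolerance)


# Example: a reference run and a candidate run that differ at one time step.
reference = [1.00, 1.02, 1.05, 1.03]
candidate = [1.00, 1.02, 1.06, 1.03]
result = compare_series(candidate, reference, tolerance=0.005)
print(result.passed)  # False: one value differs by about 0.01
```

A single maximum-difference threshold is the simplest possible pass/fail rule; a real suite would likely use different statistics and tolerances for different output variables.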

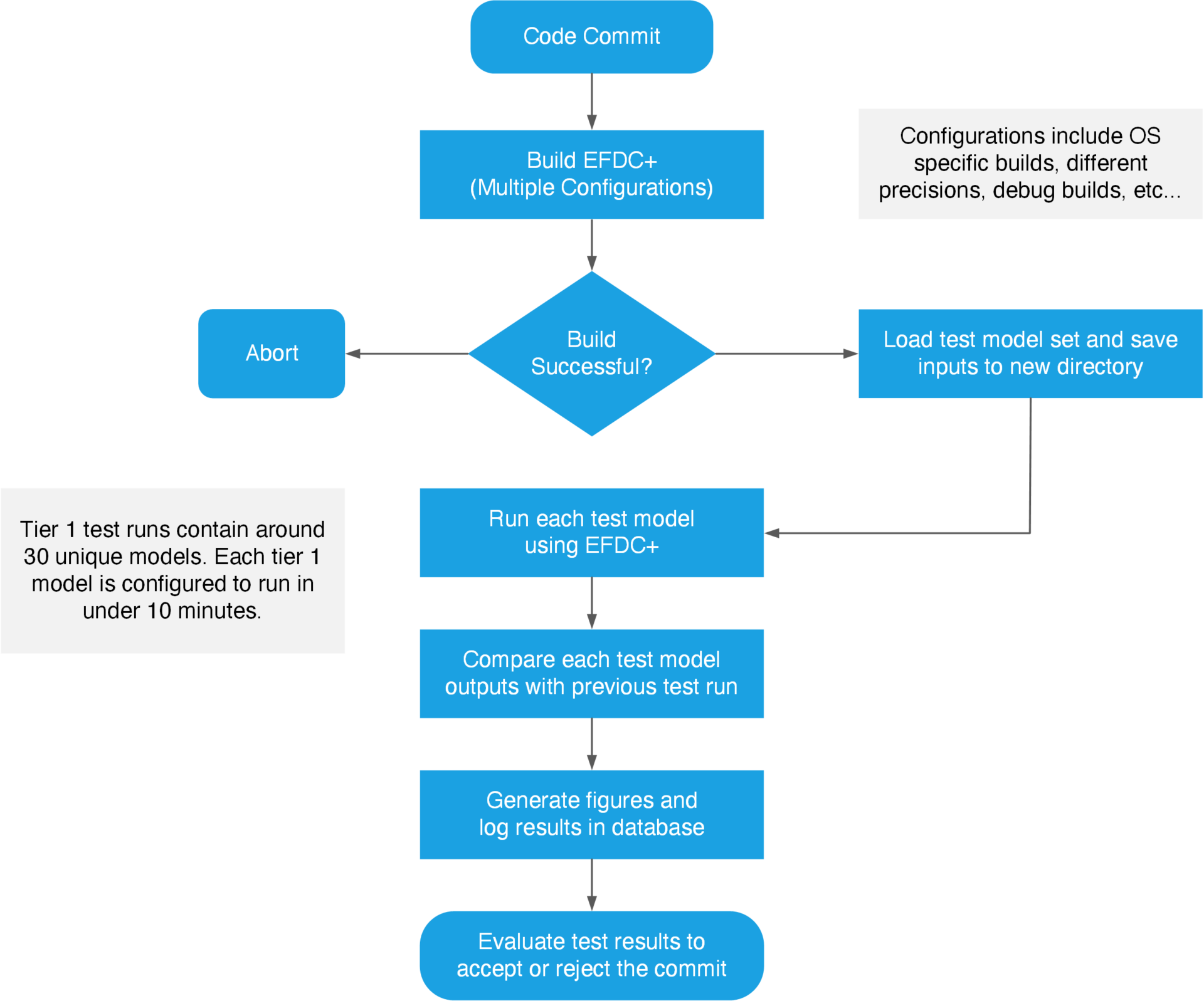

The flowchart in Figure 1 describes our automated testing process for a single test run.

The process consists of the following steps:

- A commit (code change) is pushed to our remote git repository.

- This triggers our local TeamCity build server to build multiple configurations of EFDC+.

- If the build completes successfully, the system retrieves the latest corresponding build of EFDC+ Explorer and uses it to load each of our test models and save it into a new directory. This ensures that any necessary updates to input file formats are applied.

- Once the models have all been saved into new directories, each model is run using the latest build of EFDC+.

- After all the models have run, a separate tool loads each model and performs a statistical comparison against another version of that model, usually the one from the most recent successful test run. If a model result deviates, this lets us trace the deviation to a specific commit.

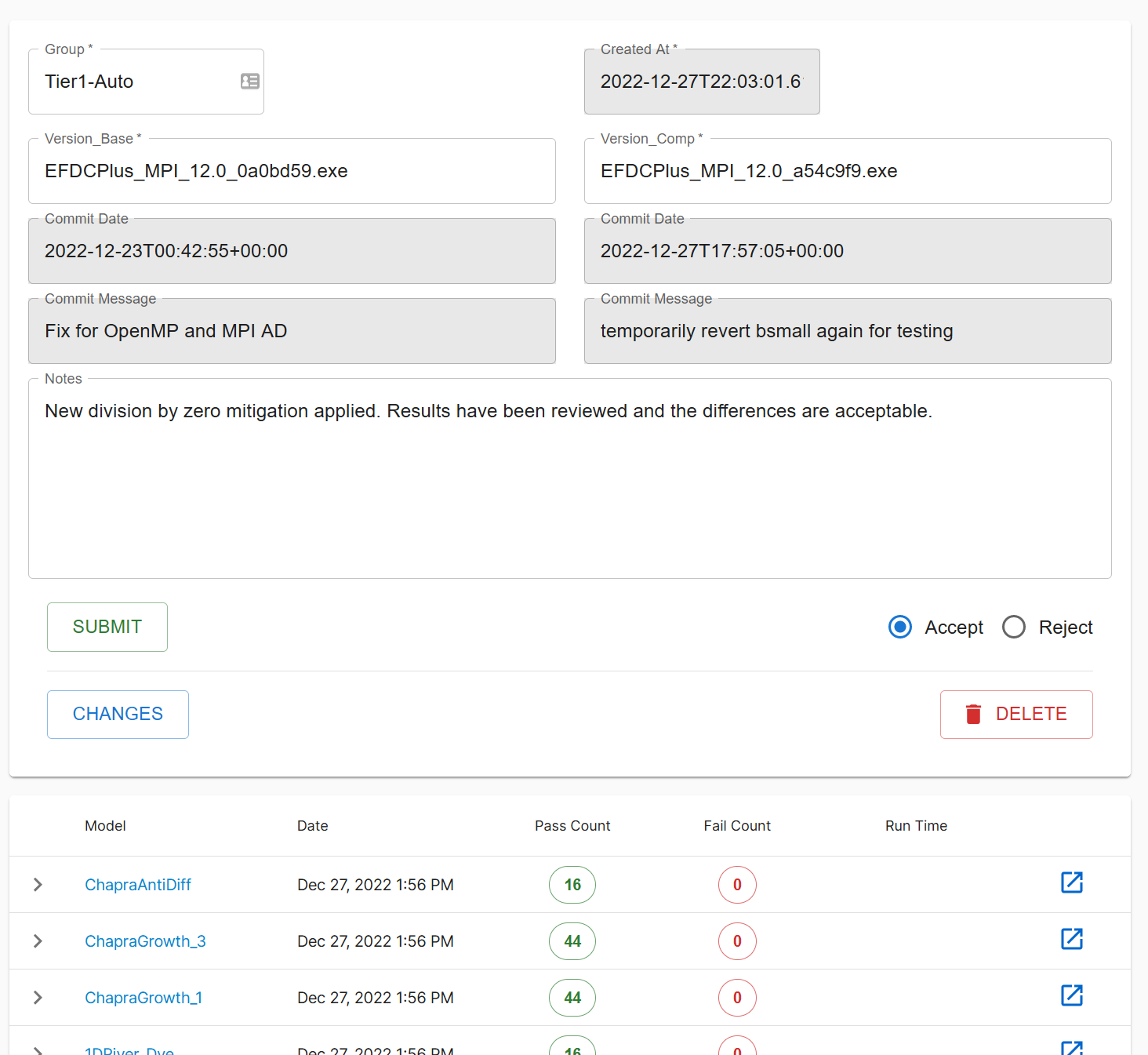

- As each model is compared to its reference, the results are collected and stored in a database. Through a custom front-end (Figure 2) we can review results, look at the test history for a specific model, make comments, and even view code differences between two builds.
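The database behind the front end is not described here, so the following is a hypothetical sketch using Python's built-in `sqlite3` module. It shows one way comparison results could be recorded per model and build, and how a model's test history could be queried for the first build whose comparison failed. Because each run is usually compared against the most recent successful run, a failing build and its reference bracket the commit that introduced the deviation. The table, column, function, model, and build names are all assumptions for illustration.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: one row per (model, build) comparison.
SCHEMA = """
CREATE TABLE IF NOT EXISTS comparisons (
    id INTEGER PRIMARY KEY,
    model_name TEXT NOT NULL,
    build_id TEXT NOT NULL,            -- build under test
    reference_build_id TEXT NOT NULL,  -- build it was compared against
    rmse REAL NOT NULL,
    max_abs_diff REAL NOT NULL,
    passed INTEGER NOT NULL,           -- 1 = pass, 0 = fail
    comment TEXT,
    recorded_at TEXT NOT NULL
)
"""


def record_comparison(conn, model_name, build_id, reference_build_id,
                      rmse, max_abs_diff, passed, comment=None):
    """Store one comparison result with a UTC timestamp."""
    conn.execute(
        "INSERT INTO comparisons (model_name, build_id, reference_build_id, rmse, "
        "max_abs_diff, passed, comment, recorded_at) VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
        (model_name, build_id, reference_build_id, rmse, max_abs_diff,
         int(passed), comment, datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


def model_history(conn, model_name):
    """Return (build, reference build, passed, max_abs_diff) rows in insertion order."""
    return conn.execute(
        "SELECT build_id, reference_build_id, passed, max_abs_diff "
        "FROM comparisons WHERE model_name = ? ORDER BY id",
        (model_name,),
    ).fetchall()


def first_failing_build(conn, model_name):
    """Return (build, reference build) of the earliest failed comparison, or None."""
    return conn.execute(
        "SELECT build_id, reference_build_id FROM comparisons "
        "WHERE model_name = ? AND passed = 0 ORDER BY id LIMIT 1",
        (model_name,),
    ).fetchone()


# Example with an in-memory database and made-up model and build names.
conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
record_comparison(conn, "harbor_test", "build-101", "build-100", 0.0, 0.0, True)
record_comparison(conn, "harbor_test", "build-102", "build-101", 0.004, 0.012, False,
                  "velocity field changed")
print(first_failing_build(conn, "harbor_test"))  # ('build-102', 'build-101')
```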

In upcoming posts, we will present our test models, their selection criteria, the parameters we evaluate, and the pass/fail criteria for code changes. We will also invite suggestions for models to add to our testing suite.